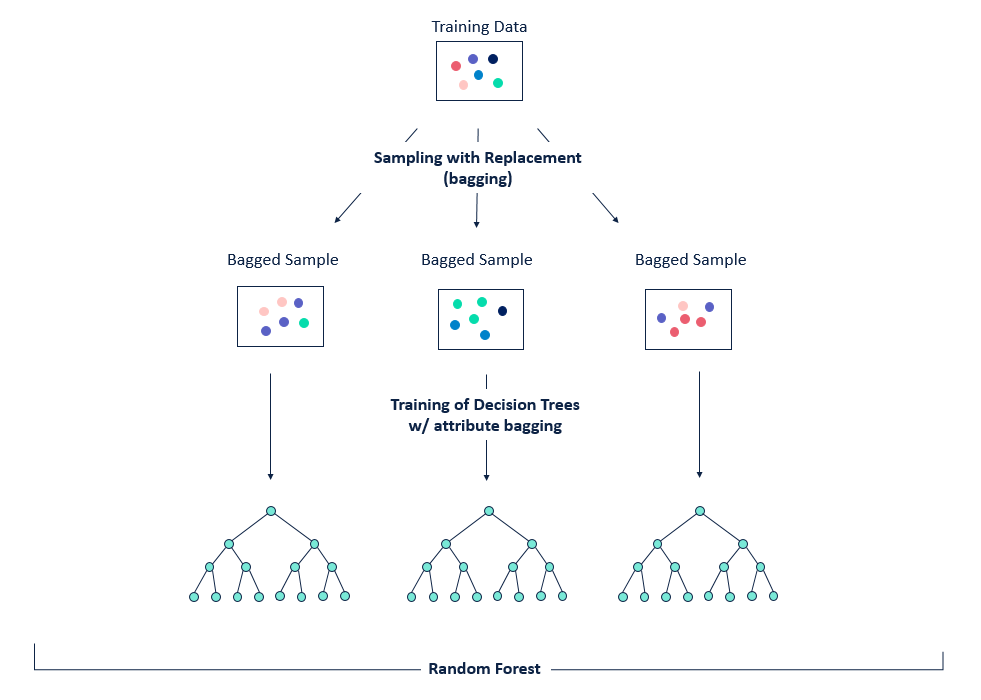

Ensemble Models - Bagging, RandomForest and Boosting

As a recap of Decision Trees, we learnt that a decision tree is a computational model that contains a set of if-then-else decisions to classify data, and that it is similar to a program flow diagram. The root node consists of the entire set of data. A segment or branch is formed by applying a splitting rule to the data above it. A node that branches out is called a decision node. Bottom nodes that do not branch out are called leaves. Splitting rules are applied on each branch, creating a hierarchy of branches that forms the decision tree. Data points are assigned to nodes in a mutually exclusive manner. For each data point, the decision tree provides a unique path to the class defined by a leaf. Decision Trees are simple and useful for interpretation. However, simple Decision Trees typically are not competitive with the best supervised learning approaches in terms of prediction accuracy.

Ensemble Models, or Ensemble Learning, is the method of combining many weaker learning models in order to provide better prediction capability. These learning models may run in parallel or sequentially, and in certain ensemble techniques they may learn from each other, thus compounding learning. The accuracy of the ensemble model is often better than that of any of the individual weaker models that were combined to create it. Generally, the more varied the learning models within the ensemble, the more robust the results are observed to be. However, certain ensemble methods (like Random Forests) use the same learning model and still yield excellent results very quickly.

Bagging, Boosting and Stacking are some ensemble techniques that improve on Decision Trees to provide higher accuracy. Ensemble models are computation intensive.

Bagging, or Bootstrap Aggregating, is an ensemble algorithm designed to improve the accuracy of other Machine Learning algorithms such as Decision Trees by using a concept called the bootstrap. In the following notebooks we will learn about XGBoost and Boosted Trees, special types of Boosted models.

The decision trees discussed typically suffer from high variance. For example, if we split the training data into two halves, each half produces different results, and if there are multiple such splits, each subset of the training data yields a different outcome, such as a different numerical value in a regression setting or a different class in a classification setting. The bootstrap is an approach to measure and reduce such high variance in a statistical learning method such as Decision Trees.
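The bootstrap and bagging ideas described above can be sketched in a few lines of plain Python. This is only a minimal illustration under stated assumptions, not the notebook's own implementation: a one-split regression tree (a "stump") stands in for a full decision tree, the toy data is synthetic, and the helper names (`fit_stump`, `predict_stump`, `bootstrap_sample`, `fit_bagged`, `predict_bagged`) are hypothetical. It fits the same learner on two halves of the data to show that the learned split can differ, then averages many learners fitted on bootstrap samples.

```python
import random
import statistics


def fit_stump(points):
    """Fit a one-split regression tree (a "stump") to (x, y) pairs.

    Returns (threshold, left_mean, right_mean): the stump predicts
    left_mean when x <= threshold and right_mean otherwise.
    """
    points = sorted(points)
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    best = None
    for i in range(1, len(points)):
        if xs[i] == xs[i - 1]:
            continue  # cannot place a threshold between equal x values
        left, right = ys[:i], ys[i:]
        left_mean = statistics.fmean(left)
        right_mean = statistics.fmean(right)
        # Sum of squared errors if we split between xs[i - 1] and xs[i].
        sse = sum((y - left_mean) ** 2 for y in left)
        sse += sum((y - right_mean) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, (xs[i - 1] + xs[i]) / 2, left_mean, right_mean)
    if best is None:  # all x identical: fall back to a constant prediction
        mean = statistics.fmean(ys)
        return (xs[0], mean, mean)
    return best[1:]


def predict_stump(stump, x):
    """Follow the single if-then-else rule of the stump."""
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def bootstrap_sample(points, rng):
    """Draw len(points) items from points with replacement."""
    return [rng.choice(points) for _ in points]


def fit_bagged(points, n_models, rng):
    """Fit one stump per bootstrap sample of the training data."""
    return [fit_stump(bootstrap_sample(points, rng)) for _ in range(n_models)]


def predict_bagged(stumps, x):
    """Aggregate by averaging the individual predictions (regression)."""
    return statistics.fmean([predict_stump(s, x) for s in stumps])


# Synthetic toy data (an assumption for illustration): a noisy step at x = 2.
rng = random.Random(0)
data = [(x / 10, (1.0 if x >= 20 else 0.0) + rng.gauss(0, 0.3))
        for x in range(40)]

# The same learner fitted on two halves of the data may pick different splits.
half_a, half_b = data[::2], data[1::2]
print("stump on half A:", fit_stump(half_a))
print("stump on half B:", fit_stump(half_b))

# Bagging: average 25 stumps, each trained on its own bootstrap sample.
bagged = fit_bagged(data, 25, rng)
print("bagged prediction at x=1.0:", predict_bagged(bagged, 1.0))
print("bagged prediction at x=3.0:", predict_bagged(bagged, 3.0))
```

For classification, the averaging step would typically be replaced by a majority vote over the predicted classes. In practice one would more likely reach for a library implementation than hand-rolled helpers like these.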
